1 year ago

Related news

NO CONTEXT HUMANS

1 year ago

I’m not saying you should, but I’m also not saying you shouldn’t

NO CONTEXT HUMANS

1 year ago

Me too machine, me too.

NO CONTEXT HUMANS

1 year ago

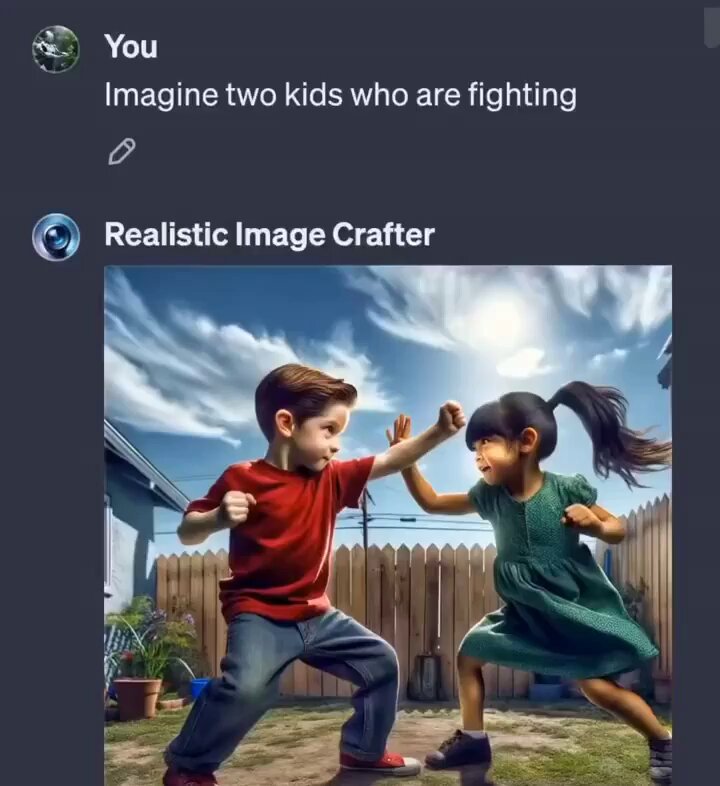

AI is wild